Authored by Ben Clothier

This is a continuation of the series Juan started on using stored procedures with Access. To see the previous articles, visit part 1 and part 2.

If you have been following the series, Juan has already walked you through how to use a stored procedure on the Access side. We’ll now turn our attention to how we return a resultset from a stored procedure. In a simple stored procedure, we could just write a SELECT statement and that’s our resultset. But what about more complex stored procedures, where we may not be able to express the resultset in a single SQL statement and still be able to read the SQL code months later? In addition to the simple SELECT statement, we’ll look at 3 alternatives for building a resultset in a stored procedure: common table expressions, table variables and temporary tables, along with the implications each one holds.

Looking at the usp_QuickMetrics procedure

Juan showed how we would invoke a stored procedure and get a recordset. The key point is that a stored procedure can bring together a wide range of queries and data points into a single table that Access can use in a dashboard. We’ll use this as a sample for considering the benefits and consequences of how we return the resultset and/or hold the data during processing. For your convenience, we’ve attached a SampleSalesDB DDL script you can download to re-create the database. You may have noticed that this stored procedure has no parameters. You could also see that we had 3 distinct ranges (the current week, the current month and year to date) applied against 3 different entities (customers, orders and shipments). Without a stored procedure, that would be 9 separate queries. However, using some wonderful features of T-SQL, we can consolidate the 3 queries for each entity into one. We’ll be looking at how we can use table variables and common table expressions to enhance our stored procedures.

Defining the resultset

By design, a stored procedure returns the result of every SELECT statement it runs back to the client. Typically, we only need the final resultset. It’s also important to SET NOCOUNT ON so that the “rows affected” messages generated by intermediate statements aren’t sent to the client, where libraries such as ADO can surface them as extra, empty results. In this case, though, we’re not returning anything that exists in the database as-is and, for reasons that will become clear later on, we need a table to hold the intermediate results so we can then return everything in a single resultset. We can do it 3 different ways: a temporary table, a table variable and a common table expression (CTE). I should hasten to point out that a CTE by itself isn’t a true source that can be returned. Its advantage lies in simplifying what would otherwise be a needlessly complex query, so we can return a complex result without writing indecipherable T-SQL. For this stored procedure, we’ll actually use both a table variable and CTEs. Be aware that the choices you make also have an impact on performance; we’ll talk about that after we’ve looked at those 3 methods.

Simplifying with Common Table Expressions

One of the most often used features in our T-SQL code could easily be CTEs. If you’re not familiar with the construct, it looks something like this:

WITH myCTE AS ( <SELECT SQL statement...> ) <Single SQL statement...>;

This is much easier to read than the traditional approach of using a derived table defined in a FROM clause. As with views, a CTE is expanded into the statement that uses it, so it generally gets the same execution plan as the equivalent SQL statement written with derived tables in the SELECT and/or FROM clause. CTEs are a great way to make a “disposable view” that isn’t needed by anything but this one stored procedure.

I want to point out one important thing that may trip up even those familiar with T-SQL: whenever you use a CTE, the statement that precedes it must be terminated with a semicolon. That can catch you out if you’re in the habit of writing T-SQL without terminating semicolons (which have long been optional for most statements). The WITH keyword, along with a few other newer T-SQL keywords, can mean different things, and without a semicolon to terminate the preceding statement, the parser cannot tell whether you’re adding a hint or starting a CTE. Some people like to adopt this style:

;WITH myCTE AS ( ...

so they don’t have to add semicolons to every single SQL statement.
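As a minimal sketch of the two styles side by side (the variable and CTE names are made up for illustration, and sys.objects is queried only because it exists in every database):

```sql
-- Style 1: terminate every statement, so WITH can only start a CTE.
DECLARE @Cutoff DATETIME;
SET @Cutoff = DATEADD(DAY, -30, GETDATE());

WITH RecentObjects AS (
    SELECT name, create_date
    FROM sys.objects
    WHERE create_date >= @Cutoff
)
SELECT COUNT(*) AS RecentObjectCount
FROM RecentObjects;

-- Style 2: the earlier statements have no terminators, so the
-- semicolon is placed directly in front of WITH instead.
DECLARE @Cutoff2 DATETIME
SET @Cutoff2 = DATEADD(DAY, -7, GETDATE())

;WITH RecentObjects AS (
    SELECT name, create_date
    FROM sys.objects
    WHERE create_date >= @Cutoff2
)
SELECT COUNT(*) AS RecentObjectCount
FROM RecentObjects
```

If the leading semicolon in the second style is removed, the batch fails with a syntax error near WITH, because the parser reads it as a continuation of the SET statement.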

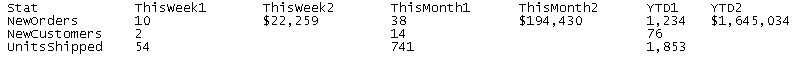

If we go back and look at the expected outcome:

We need to collect some data on the orders, specifically the number of new orders made during a certain date range and the sum of the charges for those orders. Because of the different date ranges, we have to count/sum 3 times. With CTEs, we can ensure that we work with the same subset each time, which simplifies our code:

WITH OrderBase AS (
    -- Base CTE, 3 next CTEs will derive from this
    SELECT OrderID, OrderDate, OrderTotal
    FROM dbo.tblOrders
    WHERE Cancelled = 0
      AND OrderDate >= @ThisYear
      AND OrderDate < @Tomorrow
), NewOrdersThisWeek AS (
    -- First CTE
    SELECT COUNT(OrderID) AS NewOrder, SUM(OrderTotal) AS OrderTotal
    FROM OrderBase
    WHERE OrderDate >= @ThisWeek
      AND OrderDate < @NextWeek
), NewOrdersThisMonth AS (
    -- Second CTE
    SELECT COUNT(OrderID) AS NewOrder, SUM(OrderTotal) AS OrderTotal
    FROM OrderBase
    WHERE OrderDate >= @ThisMonth
      AND OrderDate < @NextMonth
), NewOrdersYTD AS (
    -- Third CTE
    SELECT COUNT(OrderID) AS NewOrder, SUM(OrderTotal) AS OrderTotal
    FROM OrderBase
)
-- Done defining CTEs, now to process actual query
INSERT INTO @Res (
    Stat, ThisWeek1, ThisWeek2, ThisMonth1, ThisMonth2, YTD1, YTD2
)
SELECT 'New Orders',
       tw.NewOrder, tw.OrderTotal,
       tm.NewOrder, tm.OrderTotal,
       ytd.NewOrder, ytd.OrderTotal
FROM NewOrdersThisWeek AS tw -- Refer to the respective 3 CTEs
CROSS JOIN NewOrdersThisMonth AS tm
CROSS JOIN NewOrdersYTD AS ytd;

The first thing I want to point out is that we’ve defined a total of four common table expressions: OrderBase, NewOrdersThisWeek, NewOrdersThisMonth and NewOrdersYTD. OrderBase gives us the subset of the tblOrders table we actually need to perform our counts & sums for all ranges, as well as applying common criteria (e.g. excluding all cancelled orders). The subsequent 3 CTEs use OrderBase in their FROM clause. You can see that the 3 CTEs are almost identical except for their WHERE clauses. Finally, note that we used variables to mark the start and the end of each range we want the sums/counts for.
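The range variables themselves aren’t shown in this article. One way they might be set up is sketched below; this is only an assumption about how the boundaries could be computed, it treats OrderDate as a DATETIME, and the first day of the week follows whatever the session’s DATEFIRST setting says.

```sql
-- Assumption: OrderDate is a DATETIME and the week starts on the day
-- implied by the session's DATEFIRST setting. Adjust to your schema.
DECLARE @Today     DATETIME,
        @Tomorrow  DATETIME,
        @ThisWeek  DATETIME,
        @NextWeek  DATETIME,
        @ThisMonth DATETIME,
        @NextMonth DATETIME,
        @ThisYear  DATETIME;

-- Midnight today: count whole days since the base date (0), add them back.
SET @Today     = DATEADD(DAY, DATEDIFF(DAY, 0, GETDATE()), 0);
SET @Tomorrow  = DATEADD(DAY, 1, @Today);

-- First day of the current week and of the following week.
SET @ThisWeek  = DATEADD(DAY, 1 - DATEPART(WEEKDAY, @Today), @Today);
SET @NextWeek  = DATEADD(DAY, 7, @ThisWeek);

-- First day of the current month and of the following month.
SET @ThisMonth = DATEADD(MONTH, DATEDIFF(MONTH, 0, @Today), 0);
SET @NextMonth = DATEADD(MONTH, 1, @ThisMonth);

-- First day of the current year.
SET @ThisYear  = DATEADD(YEAR, DATEDIFF(YEAR, 0, @Today), 0);

-- Quick check of the computed boundaries.
SELECT @ThisWeek AS ThisWeek, @NextWeek AS NextWeek,
       @ThisMonth AS ThisMonth, @NextMonth AS NextMonth,
       @ThisYear AS ThisYear, @Tomorrow AS Tomorrow;
```

Every range has an inclusive start and an exclusive end, which matches the >= and < comparisons used in the CTEs and avoids missing rows with a time portion on the last day.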

More importantly, you get to read the SQL from top to bottom. That is a big improvement over the traditional approach, where you would write a sub-SELECT inside the outer SELECT or FROM and have to read your SQL from the inside out. You also don’t have to repeat the same common criteria (e.g. excluding the cancelled orders), which just adds to the noise, or create one-trick views.
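For comparison, here is a rough sketch of part of the same query written with derived tables instead of CTEs. It covers only the week and year-to-date ranges to stay short, returns the row directly rather than inserting it, and declares its own date variables, which are an assumption rather than part of the original procedure.

```sql
-- Assumed range boundaries; see the note above about how they are derived.
DECLARE @Today DATETIME, @Tomorrow DATETIME,
        @ThisWeek DATETIME, @NextWeek DATETIME, @ThisYear DATETIME;
SET @Today    = DATEADD(DAY, DATEDIFF(DAY, 0, GETDATE()), 0);
SET @Tomorrow = DATEADD(DAY, 1, @Today);
SET @ThisWeek = DATEADD(DAY, 1 - DATEPART(WEEKDAY, @Today), @Today);
SET @NextWeek = DATEADD(DAY, 7, @ThisWeek);
SET @ThisYear = DATEADD(YEAR, DATEDIFF(YEAR, 0, @Today), 0);

-- Each derived table has to restate the base criteria that the
-- OrderBase CTE expressed once.
SELECT 'New Orders' AS Stat,
       tw.NewOrder   AS ThisWeek1,
       tw.OrderTotal AS ThisWeek2,
       ytd.NewOrder   AS YTD1,
       ytd.OrderTotal AS YTD2
FROM (
    SELECT COUNT(OrderID) AS NewOrder, SUM(OrderTotal) AS OrderTotal
    FROM dbo.tblOrders
    WHERE Cancelled = 0
      AND OrderDate >= @ThisYear AND OrderDate < @Tomorrow
      AND OrderDate >= @ThisWeek AND OrderDate < @NextWeek
) AS tw
CROSS JOIN (
    SELECT COUNT(OrderID) AS NewOrder, SUM(OrderTotal) AS OrderTotal
    FROM dbo.tblOrders
    WHERE Cancelled = 0
      AND OrderDate >= @ThisYear AND OrderDate < @Tomorrow
) AS ytd;
```

The outer SELECT comes first here, so you have to jump into the parentheses to find out what tw and ytd actually are, and the cancelled-order filter appears once per range.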

I want to step aside and point out that we could also re-express those 3 CTEs as a table-valued function, which we can look at in a later blog post. For now, let’s say that we have no need for the dynamic parameters that a table-valued function would offer. If our sample stored procedure only needed metrics on orders, we could certainly omit the INSERT INTO clause and use the outermost SELECT statement to return our resultset.
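To give a rough idea of the table-valued function alternative, here is a hedged sketch of an inline function. The name dbo.ufn_OrderMetricsSketch and its parameters are hypothetical and not part of the sample database; only the dbo.tblOrders columns come from the query above.

```sql
-- Hypothetical inline table-valued function; name and parameters are
-- illustrative only.
CREATE FUNCTION dbo.ufn_OrderMetricsSketch (
    @RangeStart DATETIME,
    @RangeEnd   DATETIME
)
RETURNS TABLE
AS
RETURN (
    SELECT COUNT(OrderID)  AS NewOrder,
           SUM(OrderTotal) AS OrderTotal
    FROM dbo.tblOrders
    WHERE Cancelled = 0
      AND OrderDate >= @RangeStart
      AND OrderDate <  @RangeEnd
);
-- GO is a batch separator understood by SSMS and sqlcmd, not T-SQL itself.
GO

-- Each date range becomes one call with different arguments.
DECLARE @Today DATETIME, @Tomorrow DATETIME, @ThisMonth DATETIME;
SET @Today     = DATEADD(DAY, DATEDIFF(DAY, 0, GETDATE()), 0);
SET @Tomorrow  = DATEADD(DAY, 1, @Today);
SET @ThisMonth = DATEADD(MONTH, DATEDIFF(MONTH, 0, @Today), 0);

SELECT tm.NewOrder, tm.OrderTotal
FROM dbo.ufn_OrderMetricsSketch(@ThisMonth, @Tomorrow) AS tm;
```

The trade-off is that the function becomes a permanent object in the database, whereas the CTEs live and die with the one statement that uses them.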

Table Variables rock for intermediate results!

In some cases, using CTEs and returning a final SELECT statement is all we need for a stored procedure. For this sample stored procedure, though, our resultset has more than one row, each built by a separate statement, so we need a way to hold onto the data as we build up the resultset. That is where table variables excel. Unlike a CTE, a table variable persists for the whole procedure body rather than for a single statement. In older versions of SQL Server, we used temporary tables, but they had a big downside: a temporary table is created in the tempdb database, so creating it and inserting into it may mean writing to the disk. Because the hard drive is typically the slowest subsystem of a database server, it’s desirable to be able to do this in memory.

In theory, a table variable is a wholly in-memory construct, which may mean a substantial improvement in performance. Why the “in theory” disclaimer? Well, a table variable is also backed by tempdb, so there’s no guarantee it actually stays in memory. If SQL Server is under pressure and needs more memory than it has, the table variable’s pages may end up being written to the disk. In this sample stored procedure, we have a fixed-size resultset, so it’s pretty safe to expect that it won’t consume much memory, which makes it less likely to be pushed out to disk. Consideration also needs to be given to how long the table variable will exist. If a stored procedure is long-running and has to run in the midst of a busy server, there’s a higher chance that this will occur.

Declaring a table variable is very similar to a CREATE TABLE statement, except that we DECLARE it and use the variable naming convention (the @ prefix):

DECLARE @Res TABLE (
    Stat VARCHAR(20),
    ThisWeek1 INT,
    ThisWeek2 MONEY,
    ThisMonth1 INT,
    ThisMonth2 MONEY,
    YTD1 INT,
    YTD2 MONEY
);

We don’t need a primary key or indexes because, in the end, we’ll be reading the whole table, so indexing is of no help here. At the end of the stored procedure, we do this:

SELECT * FROM @Res;

You already saw that we used CTEs with an INSERT INTO query to add a new row into the table variable @Res.
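Putting the pieces together, the overall shape of such a procedure might look like the sketch below. The name dbo.usp_QuickMetricsSketch is hypothetical, the table variable is cut down to the year-to-date columns, and only the orders row is filled in; it is meant to show where each piece goes, not to reproduce the real usp_QuickMetrics.

```sql
-- Hypothetical, cut-down procedure showing the overall structure only.
CREATE PROCEDURE dbo.usp_QuickMetricsSketch
AS
BEGIN
    -- Suppress "rows affected" messages from the intermediate INSERTs.
    SET NOCOUNT ON;

    -- Assumed range boundaries for year to date.
    DECLARE @Today DATETIME, @Tomorrow DATETIME, @ThisYear DATETIME;
    SET @Today    = DATEADD(DAY, DATEDIFF(DAY, 0, GETDATE()), 0);
    SET @Tomorrow = DATEADD(DAY, 1, @Today);
    SET @ThisYear = DATEADD(YEAR, DATEDIFF(YEAR, 0, @Today), 0);

    -- Holds one row per statistic while the resultset is built up.
    DECLARE @Res TABLE (
        Stat VARCHAR(20),
        YTD1 INT,
        YTD2 MONEY
    );

    -- One INSERT per entity; only orders are shown here.
    WITH OrderBase AS (
        SELECT OrderID, OrderTotal
        FROM dbo.tblOrders
        WHERE Cancelled = 0
          AND OrderDate >= @ThisYear
          AND OrderDate < @Tomorrow
    )
    INSERT INTO @Res (Stat, YTD1, YTD2)
    SELECT 'New Orders', COUNT(OrderID), SUM(OrderTotal)
    FROM OrderBase;

    -- Similar INSERT statements for customers and shipments would go here.

    -- The only resultset returned to the client.
    SELECT Stat, YTD1, YTD2
    FROM @Res;
END;
```

Because the table variable outlives each individual INSERT, every entity can be computed by its own readable statement and Access still receives a single recordset.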

The good old standby, temporary tables

Temporary tables have been a feature of SQL Server for a very long time and are more like permanent tables than variables, which may be a good or a bad thing. As mentioned, when a new temporary table is created, it’s created within the tempdb database. You can add modifications or constraints to the temporary table as you please; you can even do an ALTER TABLE on a temporary table. Not all of this is true of a table variable. Another possible selling point is that a temporary table’s scope can be broader than a single procedure. If programmed correctly, a called stored procedure can access the temporary table created by the calling stored procedure. That isn’t the case with table variables. One more advantage of a temporary table is that it can be the target of a SELECT INTO statement, which creates the table on the fly. That’s not possible with a table variable, which must be declared first and then filled with INSERT INTO (including INSERT INTO … EXEC on SQL Server 2005 and later). Here’s a sample highlighting the temporary table’s strengths:

SELECT *,
    CASE
        WHEN PackageWeight BETWEEN 0 AND 1 THEN 1
        WHEN PackageWeight BETWEEN 1 AND 3 THEN 2
        WHEN PackageWeight BETWEEN 3 AND 10 THEN 3
        WHEN PackageWeight > 10 THEN 4
        ELSE 0
    END AS 'PackageClassCode'
INTO #tmpShipments
FROM tblShipments;
CREATE INDEX idx_tmp_Shipments_PackageClassCode
    ON #tmpShipments (PackageClassCode);
SELECT PackageClassCode, COUNT(ShipmentID)
FROM #tmpShipments
GROUP BY PackageClassCode;
DROP TABLE #tmpShipments;

In this admittedly simplified example, we can add an index to the temporary table and therefore boost the performance of subsequent queries. A table variable can be indexed, but only if the index can be defined within its DDL. The index we just created for #tmpShipments wouldn’t be possible against a table variable because we’re using CREATE INDEX. PRIMARY KEY and UNIQUE constraints are examples of indexes that can be included in the table DDL and are therefore available to table variables. (SQL Server 2014 and later also accept inline INDEX definitions in the declaration.)
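As a small illustration of indexes declared within a table variable’s DDL, consider the sketch below. The @ShipmentClasses lookup and its class names are invented for this example and are not part of the sample database.

```sql
-- Hypothetical lookup table variable: both constraints create indexes
-- as part of the declaration, with no CREATE INDEX statement needed.
DECLARE @ShipmentClasses TABLE (
    PackageClassCode INT         NOT NULL PRIMARY KEY,
    ClassName        VARCHAR(20) NOT NULL UNIQUE
);

INSERT INTO @ShipmentClasses (PackageClassCode, ClassName) VALUES (0, 'Unclassified');
INSERT INTO @ShipmentClasses (PackageClassCode, ClassName) VALUES (1, 'Light');
INSERT INTO @ShipmentClasses (PackageClassCode, ClassName) VALUES (2, 'Medium');
INSERT INTO @ShipmentClasses (PackageClassCode, ClassName) VALUES (3, 'Heavy');
INSERT INTO @ShipmentClasses (PackageClassCode, ClassName) VALUES (4, 'Freight');

SELECT PackageClassCode, ClassName
FROM @ShipmentClasses
ORDER BY PackageClassCode;
```

Trying to run CREATE INDEX against @ShipmentClasses after the declaration is not valid syntax, which is the limitation described above.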

So, how do I choose? It depends…

…whether you’re using SQL Server Express

Express is a great way to get the benefits of SQL Server without shelling out for a full license, and in many cases we use it with our small business clients. But Express has a series of limitations:

- Works with only one CPU, ignores all other CPUs on the chip

- Works with only 1 GB of memory, no matter how much memory is installed on the server

- Databases can only reach 10 GB in size

For more info on Express’s limitations click here.

…whether you’re keeping it simple

Given the limitations above, you owe it to yourself to test all three approaches (CTEs, table variables and temp tables) under real-world conditions before selecting the right one for your stored procedure. A good general strategy is to keep the process as simple as possible. The SQL Server optimizer usually does a good job of compiling a plan that works well enough, and we can address any performance issue once we’ve identified where slowdowns are occurring. Most importantly, because the procedure was written simply, it’s easier to introduce modifications to improve performance.

…on what you are doing

For our running example, we basically consolidated a series of SELECT queries, so for this case CTEs & table variables met our need perfectly. But if we had a different set of requirements, perhaps one that required more complex aggregating & grouping, then we may have found it more beneficial to use temporary tables. It’s also important to note that your stored procedure’s performance is likely to be influenced much more by whether your query is sargable and has all the indexes it needs than by whether you’re using a table variable or a temp table to return your resultset.
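To make the sargability point concrete, here is a hedged sketch of two ways to count this year’s orders. It assumes an index exists on dbo.tblOrders.OrderDate, which the sample script may or may not define.

```sql
DECLARE @YearStart DATETIME, @NextYearStart DATETIME;
SET @YearStart     = DATEADD(YEAR, DATEDIFF(YEAR, 0, GETDATE()), 0);
SET @NextYearStart = DATEADD(YEAR, 1, @YearStart);

-- Not sargable: the column is wrapped in a function, so an index on
-- OrderDate (assumed to exist) cannot be used for a seek.
SELECT COUNT(OrderID) AS NewOrder
FROM dbo.tblOrders
WHERE YEAR(OrderDate) = YEAR(GETDATE());

-- Sargable: the bare column is compared against a range, so the same
-- index can be used to seek directly to the matching rows.
SELECT COUNT(OrderID) AS NewOrder
FROM dbo.tblOrders
WHERE OrderDate >= @YearStart
  AND OrderDate <  @NextYearStart;
```

Both queries return the same count; the difference only shows up in the execution plan, which is why the range style is used throughout the CTEs in this article.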

…whether it’s the right size

We also have to remember that the data set may grow in the future and change the statistics to the point where what worked fine is no longer acceptable. At the end of the day, users are not going to be impressed by whether you used CTEs or table variables. What they see, and therefore care about, is the wait between clicking that proverbial button and the data materializing on their screen. It also illustrates why it’s usually best to start out simple, even if it’s not the most efficient; when the time comes for an extra boost, it’s easier to adapt a simple & readable stored procedure.

We’ve only scratched the surface of the wonderful possibilities T-SQL has to offer us, and looked at some of the performance implications behind our choices. Because the options have several overlapping functions, it’s not easy to set up a formulaic rule for which to use when. We have to consider first our intention in the stored procedure, then whether we need to cache the intermediate results, and finally whether the intermediate result is large enough to warrant residing on disk and being indexed. Then, of course, test the procedure.

Happy stored proceduring!

